Depending on both your Server Specifications and your Server Setup, you may run into issues where it feels everything is grinding to a halt.

Before reading any further, I just need to make 2 things very clear:

- This is not a “how-to”, but instead a collection of notes

- This only relates to a very specific issue

The Issue

As I mentioned, this post relates to a very specific issue that results in the following 3 problems:

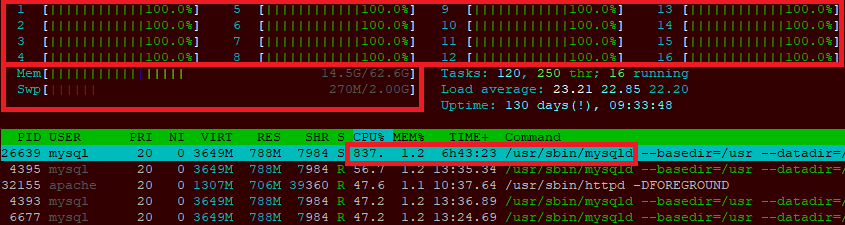

- All CPU cores max out at 100%

- Mem and Swp eventually max out at 100%

- MySQL process is running at incredibly high CPU usage

These issues are easily identifiable using the htop command.

Screenshot of htop

The Investigation

The experienced among you may look at this and think “well, it’s obviously mysql causing the issues” and you’d be 100% correct. But why?

At first glance, I simply assumed it could be down to one of the following issues:

- Super-high traffic

- Cron tasks bottlenecking

- A MySQL process is hanging

However, having looked into these I could see nothing obvious. So, that meant the issue was something beyond my expertise.

As you can see from the screenshot above, this is an Enterprise Grade Dedicated Server. So there is really no reason why it should ever be capping out for extended periods of time.

I even checked the MySQL Process List and there were only less than 15 processes being run at any one time.

The solution(s)?

After much deliberation, it turned out to be a potential oversight from my side. When the server went into Production, I never considered optimising the MySQL variables. This is something I should have looked at within the first couple of weeks of going live after studying the data.

The innodb_buffer_pool_size and innodb_log_file_size variables were still set to their default values. Based on the Server Specifications and the applications running on it, those values needed to be increased accordingly. (There’s no point me sharing these values as they are unique to this server setup).

Since adjusting these values and restarting mysql, all resources now appear to be running at optimal levels - Thus fixing the issue. If this changes, I’ll update this post accordingly.

Similar issues/solutions

At the beginning of this post, I mentioned that this solution is very specific to this issue. However, I can share with you 2 similar instances.

- I saw something similar when attempting to run Magento 2 on a server with just a single core once. Running Apache & MySQL on a single core is incredibly intensive for the CPU. Upgrading the server to a minimum of 2 Cores (plus 2GB memory) solved this.

- I used to run into an issue in Magento 2.2.x, where sometimes the cron tasks would bottleneck and cause the server to grind to a halt. This issue is evident when you look at the

cron_scheduletable in the Magento 2 database. Normally, there are around 1,000-5,000 rows. But when the issue is in full effect, this can be anything above 40,000 rows. I found the way to clear the bottleneck and fix the issue was to Truncate thecron_scheduletable and restart Apache/MySQL. I’ve not run into this issue again since upgrading to Magento 2.2.7.

Final Notes

I’m going to lock this post. However, feel free to create your own post if you’d like to discuss similar topics. Alternatively, if you find this post useful then please let me know by liking the post.